Since 2014 version of SQL server, there were implemented new approarches to help query optimizer better understand your SQL query and data estimation, for example correlation in predicates on the same table, correlation in predicates between tables, that outdated statistics can have newer data, etc…

More info can be found here: https://bit.ly/37ddnoe

Motivation to write this article was that there could be situations the cardinality estimator could make things worse. I am experienced in one of my projects that query stopped to finish in expected time after upgrading SQL server to higher version (from 2008 to 2016). I prepared simple example simulating that situation:

Let’s create table dbo . WrongEstimationTable, which have multiple relationships with another one table dbo.SameTable. I left dbo.WrongEstimationTable

empty, that there will be no rows in result set. So we would expect that execution plan estimates that no rows will be returned.

CREATE TABLE dbo.WrongEstimationTable(id1 BIGINT, id2 BIGINT,id3 BIGINT,id4 BIGINT,id5 BIGINT)

Fill dbo.SameTable with some testing data.

CREATE TABLE dbo.SameTable (id BIGINT,identityid INT IDENTITY(1,1),typeid INT) INSERT INTO dbo.SameTable(id,typeid) SELECT object_id,1 FROM sys.objects INSERT INTO dbo.SameTable(id,typeid) SELECT object_id,2 FROM sys.objects

Create indexes to help the query to get the data as fast as possible.

CREATE CLUSTERED INDEX CX_identityid ON dbo.SameTable(identityid ) CREATE UNIQUE NONCLUSTERED INDEX CX_id ON dbo.SameTable(typeid,id ) INCLUDE (identityid)

Run the query bellow where dbo.SameTable correlates with dbo.WrongEstimationTable using typeid predicate. Show actual execution plan on older version of SQL server or switch off Cardinality estimator if you use new version of SQL server, query bellow.

SELECT * FROM

dbo.WrongEstimationTable t

JOIN dbo.SameTable s1 ON s1.id=t.id1 AND s1.typeid=1

JOIN dbo.SameTable s2 ON s2.id=t.id2 AND s2.typeid=1

JOIN dbo.SameTable s3 ON s3.id=t.id3 AND s3.typeid=1

JOIN dbo.SameTable s4 ON s4.id=t.id4 AND s4.typeid=1

JOIN dbo.SameTable s5 ON s5.id=t.id5 AND s5.typeid=1

OPTION (USE HINT('FORCE_LEGACY_CARDINALITY_ESTIMATION'))

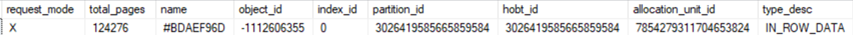

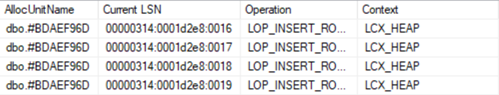

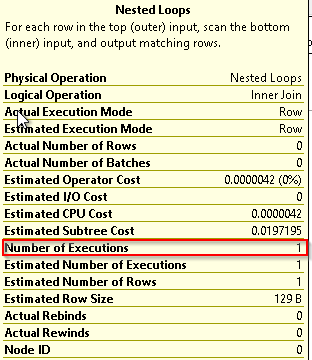

See that optimizer estimates that on the second one table there are no data, so the number of rows and executions are very low – one, as expected.

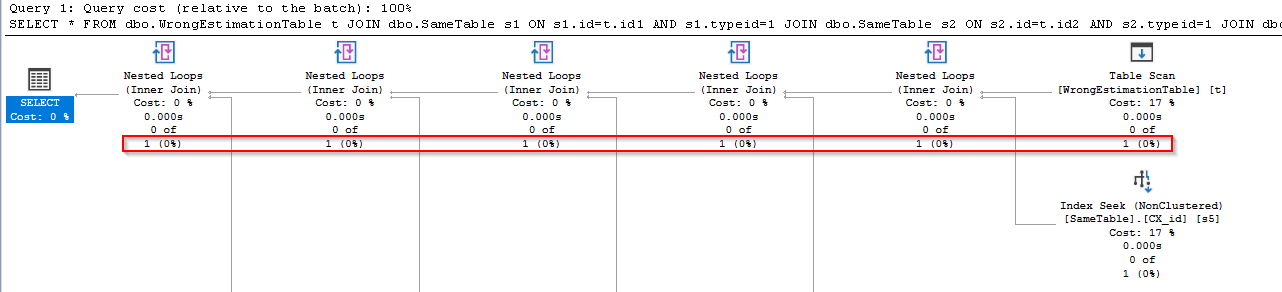

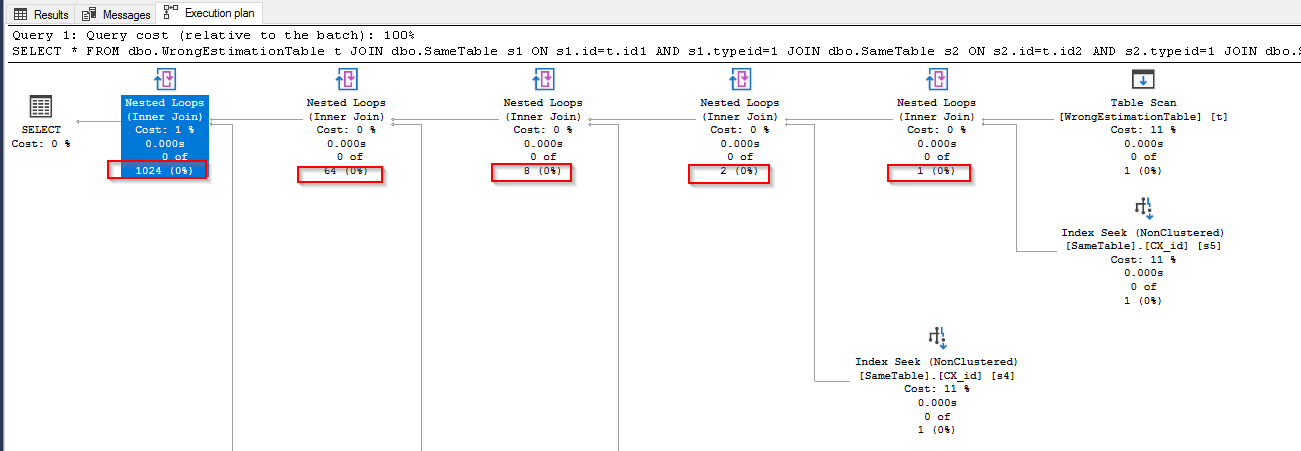

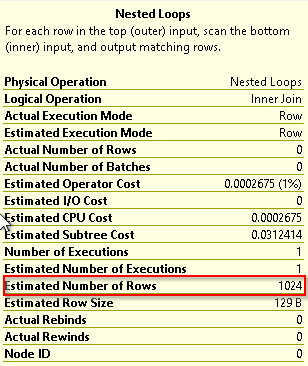

Running the same query on SQL server 2016 or earlier builds of SQL Server 2017 leads to very strange execution plan were optimizer expect increasing estimated number of rows in each branch of nested loop to 1024 estimated rows. As you can see on picture bellow. In production it could cause your query to prolong its execution time rapidly.

As solution you can:

- Rewrite the query

- Use the old version of Query Optimizer – it comes with Cumulative update 10 for SQL server 2017 https://bit.ly/2r3YyDO

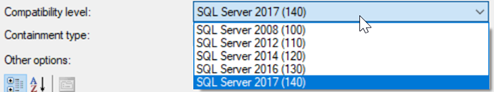

- Use the database compatibility level for 2008 (100)

- Switch off Cardinality Estimator use the old functionality of optimizer for Cardinality estimation

- Whatever I forgot

1.

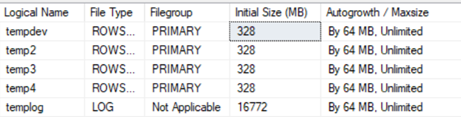

SELECT id,identityid INTO #tmp FROM dbo.SameTable WHERE typeid=1 CREATE CLUSTERED INDEX CX_identityid ON #tmp(id) CREATE UNIQUE NONCLUSTERED INDEX CX_id ON #tmp( id ) INCLUDE (identityid) SELECT * FROM dbo.WrongEstimationTable t JOIN #tmp s1 ON s1.id=t.id1 JOIN #tmp s2 ON s2.id=t.id2 JOIN #tmp s3 ON s3.id=t.id3 JOIN #tmp s4 ON s4.id=t.id4 JOIN #tmp s5 ON s5.id=t.id5

2.

SELECT *

FROM dbo.WrongEstimationTable t

JOIN dbo.SameTable s1 ON s1.id=t.id1 AND s1.typeid=1

JOIN dbo.SameTable s2 ON s2.id=t.id2 AND s2.typeid=1

JOIN dbo.SameTable s3 ON s3.id=t.id3 AND s3.typeid=1

JOIN dbo.SameTable s4 ON s4.id=t.id4 AND s4.typeid=1

JOIN dbo.SameTable s5 ON s5.id=t.id5 AND s5.typeid=1

OPTION (USE HINT('QUERY_OPTIMIZER_COMPATIBILITY_LEVEL_100'))

3.

4.

SELECT *

FROM dbo.WrongEstimationTable t

JOIN dbo.SameTable s1 ON s1.id=t.id1 AND s1.typeid=1

JOIN dbo.SameTable s2 ON s2.id=t.id2 AND s2.typeid=1

JOIN dbo.SameTable s3 ON s3.id=t.id3 AND s3.typeid=1

JOIN dbo.SameTable s4 ON s4.id=t.id4 AND s4.typeid=1

JOIN dbo.SameTable s5 ON s5.id=t.id5 AND s5.typeid=1

OPTION (USE HINT('FORCE_LEGACY_CARDINALITY_ESTIMATION'))

I was not able to simulate above described situation on SQL server 2017 with cumulative update 10, https://bit.ly/35egiev, seems the issue was resolved.

When migrating or upgrading your current version of SQL Server to higher version you should check description of new features or cumulative updates on MSDN. And you should be prepared on scenarios that things could be worse after your updates.